Zhipu AI Launches Its Third-Generation Base Large Model, ChatGLM3

On October 27th, Zhipu AI launched ChatGLM3, its fully self-developed third-generation base large model, along with a series of related products, at the 2023 China National Computer Congress (CNCC). ChatGLM3 uses an innovative multi-stage enhanced pre-training method that makes its training more comprehensive.

Zhipu AI CEO Zhang Peng presented the new products on site and demonstrated their latest features live. According to reports, richer training data and better training strategies give ChatGLM3 markedly stronger performance than its predecessor: compared with ChatGLM2, its scores rose by 36% on MMLU, 33% on C-Eval, 179% on GSM8K, and 126% on BBH.

ChatGLM3 also aims to close the gap with GPT-4V through iterative upgrades to several new capabilities. These include CogVLM, a multimodal understanding capability that recognizes images and interprets their meaning, which has achieved state-of-the-art (SOTA) results on more than 10 international standard image-text benchmarks; CodeInterpreter, a code-enhancement module that generates and executes code according to user needs, automatically completing complex tasks such as data analysis and file processing; and WebGLM, a web-search enhancement that automatically searches the internet for information relevant to a question and cites links to the relevant literature or articles in its answers. ChatGLM3's semantic understanding and logical reasoning have also been greatly enhanced.

ChatGLM3 also integrates Zhipu AI's self-developed AgentTuning technology, which unlocks the model's agent capabilities; its intelligent planning and execution abilities in particular are 1000% higher than those of ChatGLM2. It also brings native support for complex scenarios such as tool calling, code execution, games, database operations, knowledge graph search and reasoning, and operating systems to domestic Chinese large models.

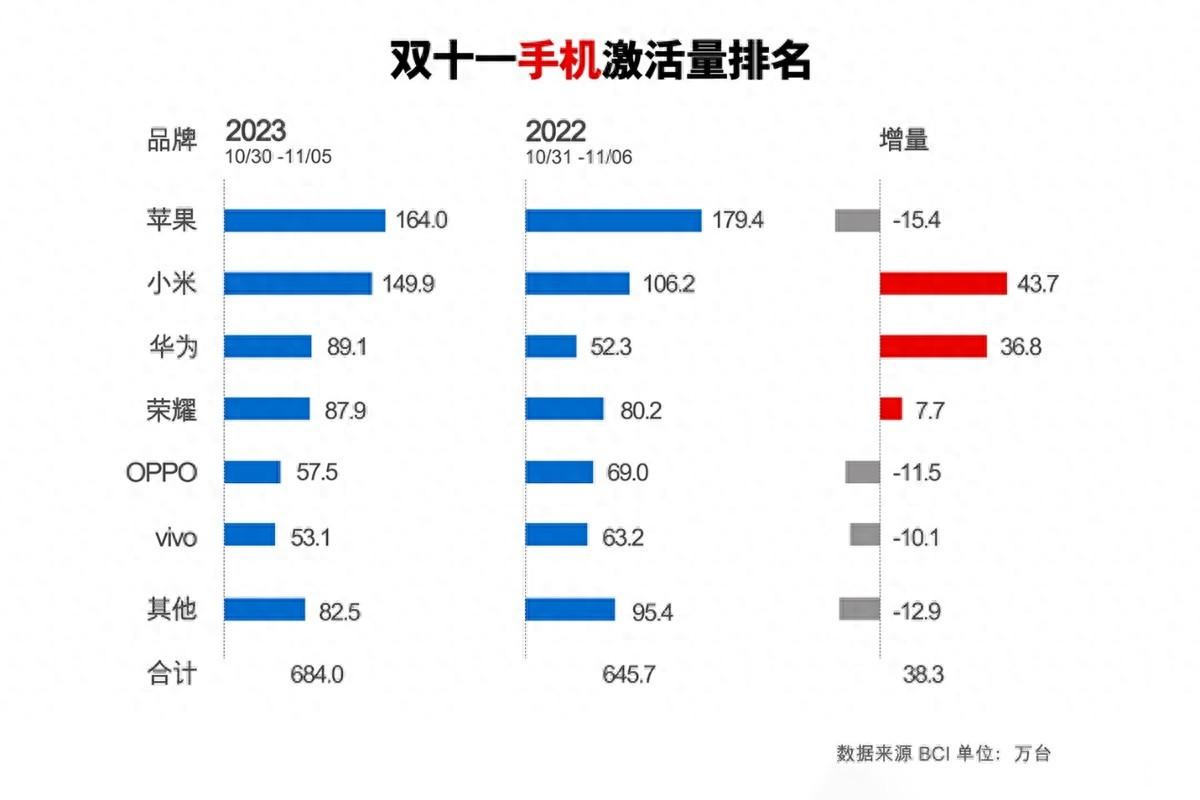

In addition, Zhipu AI has released ChatGLM3-1.5B and ChatGLM3-3B, on-device models that can be deployed on mobile hardware. They support a range of phones, including vivo, Xiaomi, and Samsung devices, as well as in-vehicle platforms, and can even run inference on the CPUs of mobile chips at up to 20 tokens/s. In terms of accuracy, the 1.5B and 3B models perform comparably to ChatGLM2-6B on public benchmarks.

Built on the latest efficient dynamic inference and memory optimization techniques, ChatGLM3's current inference framework, under identical hardware and model conditions, runs 2 to 3 times faster than the best current open-source implementations, including the latest versions of vLLM (developed at the University of California, Berkeley) and Hugging Face TGI, and halves inference costs to only 0.5 fen (RMB 0.005) per thousand tokens.

Disclaimer: The content of this article is sourced from the internet. The copyright of the text, images, and other materials belongs to the original author. The platform reprints the materials for the purpose of conveying more information. The content of the article is for reference and learning only, and should not be used for commercial purposes. If it infringes on your legitimate rights and interests, please contact us promptly and we will handle it as soon as possible! We respect copyright and are committed to protecting it. Thank you for sharing.(Email:[email protected])