Google DeepMind co-founder proposes a rating scale for artificial general intelligence, and ChatGPT is just a beginner

On November 4 local time, a DeepMind research team led by Shane Legg, co-founder of Google DeepMind, released a paper titled "Levels of AGI: Operationalizing Progress on the Path to AGI". The paper proposes a clearer definition of artificial general intelligence (AGI) and develops an AGI classification framework similar to the L1-L5 levels of autonomous driving.

AGI has long been a vague concept, roughly understood as "about as intelligent as a human." The research team wrote in the paper, "We hope to provide a common language for comparing models, assessing risks, and measuring what stage we have reached on the road to AGI."

Six Principles for Defining AGI

The research team did not summarize AGI in a single sentence, but instead proposed that any definition of AGI should meet six principles:

Focus on capabilities, not processes. That is, focus on what an AGI can accomplish rather than the mechanism by which it accomplishes tasks. Focusing on capabilities makes it possible to exclude certain things from the requirements for AGI. Achieving AGI does not imply that a system thinks or understands in a human-like way, nor that it has consciousness or sentience (the capacity for feelings). These traits concern process rather than capability, and they are not currently measurable by agreed-upon scientific methods.

Focus on generality and performance. The research team considers generality (the breadth of tasks a system can handle) and performance (how well it handles them) to be key components of AGI.

Focus on cognitive and metacognitive tasks. Most definitions of AGI focus on cognitive tasks, which can be understood as non-physical tasks. Despite some progress in robotics, the physical capabilities of AI systems appear to lag behind their non-physical capabilities. The ability to perform physical tasks does increase a system's generality, but the research team believes it should not be treated as a necessary prerequisite for achieving AGI. Metacognitive abilities, on the other hand, such as the ability to learn new tasks or to know when to ask humans for clarification or assistance, are a key prerequisite for a system to achieve generality.

Focus on potential, not deployment. Demonstrating that a system can perform a required set of tasks at a given level of performance should be sufficient to declare it an AGI. Deploying such a system in the real world should not be an inherent requirement of the definition of AGI.

Focus on ecological validity. The research team emphasizes the importance of choosing tasks that align with what people value in the real world (that is, tasks that are ecologically valid).

Focus on the path to AGI, not a single endpoint. The research team proposes a five-level classification of AGI in which each level is associated with a clear set of metrics and benchmarks, and each level introduces identified risks and changes in the paradigm of human-machine interaction. For example, OpenAI's definition of AGI as labor substitution corresponds more closely to "master AGI".

The Five-Level AGI Classification

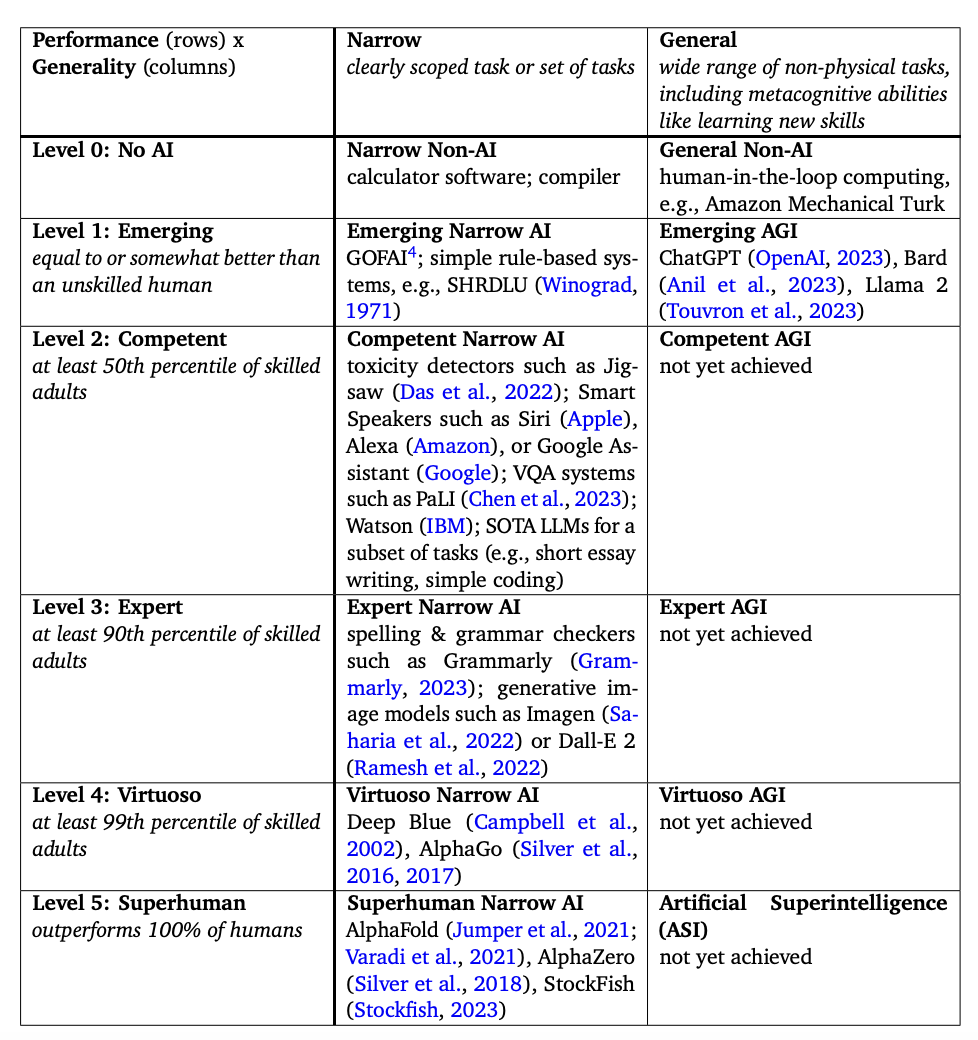

Based on depth of capability (performance) and breadth of capability (generality), systems are classified as non-AI, beginner, intermediate, expert, master, or superintelligence.

A single system may span different levels of the classification. As of September 2023, cutting-edge language models such as OpenAI's ChatGPT, Google's Bard, and Meta's Llama 2 have demonstrated "intermediate" performance on certain tasks (such as short essay writing and simple coding), but remain at the "beginner" performance level on most tasks (such as mathematical ability and tasks involving factuality).

The research team believes that, overall, the current cutting-edge language models should be considered Level 1 general AI ("beginner AGI", called "Emerging AGI" in the paper) until their performance improves across a wider set of tasks and meets the standard for Level 2 general AI ("intermediate AGI", called "Competent AGI" in the paper).
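
The idea that a model's overall level is set by what it achieves on most tasks, not on its best tasks, can be illustrated with a minimal Python sketch. The level names follow this article's wording. The `overall_level` function and its `coverage` cutoff are hypothetical choices made for illustration, since the article does not state an exact aggregation rule, and the per-task ratings simply echo the examples above and are not measured data.

```python
from enum import IntEnum


class Level(IntEnum):
    """Performance levels as named in this article, lowest to highest."""
    NO_AI = 0
    BEGINNER = 1
    INTERMEDIATE = 2
    EXPERT = 3
    MASTER = 4
    SUPERINTELLIGENCE = 5


def overall_level(task_levels: dict[str, Level], coverage: float = 0.5) -> Level:
    """Return the highest level reached on more than `coverage` of the tasks.

    ASSUMPTION: the `coverage` cutoff is a hypothetical aggregation rule for
    this sketch; the article only says performance must improve "across a
    wider set of tasks" before a model moves up a level.
    """
    if not task_levels:
        return Level.NO_AI
    total = len(task_levels)
    # Walk from the highest level down and stop at the first one that the
    # system reaches (or exceeds) on a majority of tasks.
    for level in sorted(Level, reverse=True):
        reached = sum(1 for rating in task_levels.values() if rating >= level)
        if reached / total > coverage:
            return level
    return Level.NO_AI


# Illustrative ratings that mirror the article's examples (not measured data).
frontier_llm = {
    "short essay writing": Level.INTERMEDIATE,
    "simple coding": Level.INTERMEDIATE,
    "mathematical ability": Level.BEGINNER,
    "factual tasks": Level.BEGINNER,
}

print(overall_level(frontier_llm).name)  # BEGINNER
```

Under this assumed rule, reaching "intermediate" on half of the tasks is not enough: the model stays at "beginner" until a majority of tasks reach the higher level.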

At the same time, the research team cautions that acquiring stronger skills in some cognitive domains before others may have serious implications for AI safety. For example, acquiring strong chemical engineering knowledge before mastering strong ethical reasoning skills may be a dangerous combination. The team also notes that the rate of progress between levels of performance or generality may be non-linear, and that the ability to learn new skills may accelerate progress to the next level.

In terms of both performance and generality, the highest level in the classification is ASI (artificial superintelligence). The research team defines "superintelligence" performance as outperforming 100% of humans. For example, the team places AlphaFold at the fifth level of the classification as a domain-specific "superhuman narrow AI", because on one task, predicting the three-dimensional structure of proteins from amino acid sequences, it performs better than the world's top scientists. Under this definition, a Level 5 general AI (ASI) system would be able to perform a wide range of tasks at a level no human can match.

In addition, the framework implies that such "superhuman" systems may be able to perform a wider range of tasks than lower levels of AGI, including tasks that were previously completely impossible for humans. The non-human skills an ASI might possess include neural interfaces (for example, decoding thoughts by analyzing brain signals), oracular abilities (for example, making high-quality predictions by analyzing large volumes of data), and the ability to communicate with animals (for example, by analyzing patterns in their vocalizations, brain waves, or body language).

At the end of October, Legg said in an interview on a technology podcast that he stands by his publicly stated view that there is a 50% chance researchers will achieve AGI by 2028. Which level of AGI does that refer to? He has not yet given a clear answer.
