How to prevent security risks in the era of large models

Our special correspondents in Wuzhen: Liu Yang and Li Xuanmin

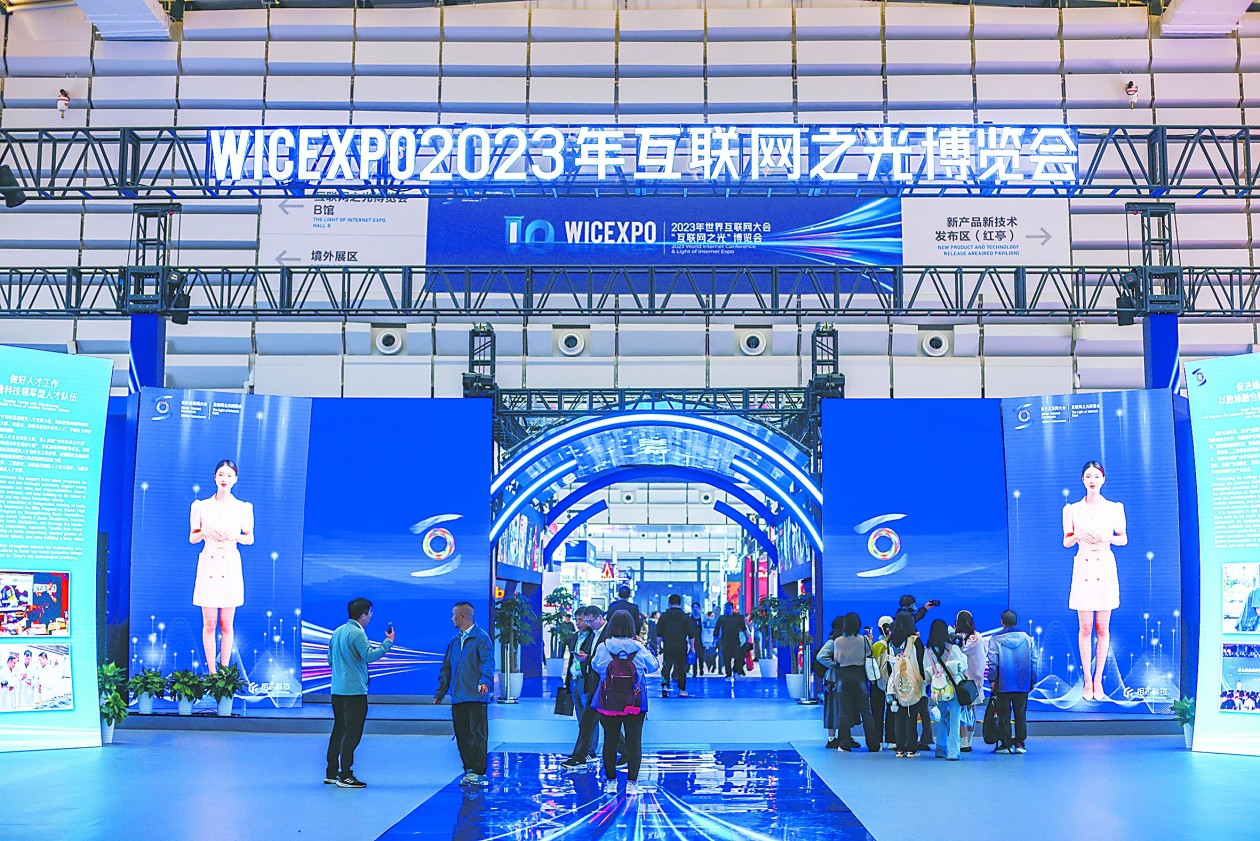

As generative artificial intelligence models such as ChatGPT continue to gain popularity, technology companies and research institutions around the world are rolling out their own large models. The rapid development of generative AI and large models has also brought new challenges to network security. At the 2023 World Internet Conference Wuzhen Summit, which opened on November 8, Chinese and foreign security experts shared their views on the new network security challenges of the large model era. At the "Internet Light" Expo, a Global Times reporter saw that most booths featured exhibits related to large models, including a comprehensive large model security solution billed as "defeating magic with magic".

What challenges do large AI models bring?

At the "Artificial Intelligence Empowerment Industry Development Forum", held on November 9 during the World Internet Conference Wuzhen Summit, Chinese and foreign experts jointly released the "Research Report and Consensus Document on Developing Responsible Generative Artificial Intelligence" (hereinafter "the report"). The report notes that in recent years generative AI has made breakthrough progress in understanding and generating text, code, images, audio, and video, and is expected to significantly raise social productivity. The report also devotes a chapter to the risks that generative AI brings.

The report states that as generative AI technology iterates and upgrades, it also amplifies technical security risks. On the data side, feeding data to models brings problems such as value bias, privacy leakage, and data pollution: bias inherent in the training data leads models to produce biased content, and the sheer volume of training data widens the risks to data security and privacy protection. On the algorithm side, the generative nature of the models and their security vulnerabilities can lead to risks such as "hallucinations" (false information) and attacks on the models themselves. The report also mentions two deeper risks. First, the reshaping of human-machine relationships by generative AI may lead to ethical lapses in technology, and its strong task-processing ability can easily make people dependent on it in their thinking. Second, the uneven development of generative AI further widens gaps in human social development.

At the "Internet Light" Expo, Global Times reporters also experienced firsthand the security risks that generative AI may pose to ordinary people. At the booth of a company focused on youth programming education, reporters tried a face-swapping experiment themed "what you see may not be real". After a reporter stood in front of the machine for a few seconds, the AI offered two face-swap models based on the reporter's facial features. One turned the reporter into "Mr. Bean" within seconds, and the other produced a bearded face. According to booth staff, the exhibit is meant to teach teenagers about the risks of face swapping.

A Chinese cybersecurity expert who has participated in the 10th World Internet Conference told Global Times reporters that in the past few years the focus was on cloud security, big data security, and Internet of Things security, but today large model security is a topic that must be taken seriously.

How large models help secure major events

According to public reports, multiple media outlets were hit by cyber attacks during the 2012 London Olympics; the 2016 Rio Olympics were hit by large-scale APT and DDoS attacks; and the 2018 PyeongChang Winter Olympics suffered the largest cybersecurity incident in Olympic history. The Global Times reporter learned at the "Internet Light" Expo that the recently concluded Hangzhou Asian Games was a contest not only of athletic performance and large-scale event organization, but also of cybersecurity attack and defense.

At the "Internet Light" Expo, a Global Times reporter saw a huge display screen that dynamically and intuitively demonstrated the situational awareness and rapid response capabilities used to protect network security during the Hangzhou Asian Games. According to Liang Hao, Vice President of Anheng Information, the "MSS Asian Games Dome", a new-generation active defense operation center shown on site, is a comprehensive operation center that served the security protection system of the Hangzhou Asian Games. Its scope was defined by the scale of the Games and covered the 88 competition and non-competition venues involved. Security details, measures, and issues could be tracked in a timely manner from the operation center's view. When needed, staff could inspect the IT rooms at a specific venue at any time, down to which slot held a given piece of security equipment. Any abnormality, interruption, or alarm could be promptly reported and handled.

It is reported that the number of athletes, the scale of events, and the number of venues at the Hangzhou Asian Games were roughly four times those of the Beijing Winter Olympics. With traditional methods, security might have required thousands of people. In practice, the on-site security team numbered just over 400. Meeting security needs at this scale with so few people was made possible by large model technology: large models were combined with the security field to help on-site analysts investigate quickly and propose solutions. Timeliness matters especially when protecting key events. During the Hangzhou Asian Games, for example, more than 26 million network attacks were observed, and automated correlation and blocking, drawing on artificial intelligence and large model capabilities, was used throughout the interception process.

How to "defeat magic with magic"

To address the challenges posed by generative AI and large models, leading Chinese cybersecurity companies also showcased their solutions at the "Internet Light" Expo. What impressed Global Times reporters most was the use of AI to counter AI, or "defeating magic with magic". At the Qi'anxin booth, for example, the reporter saw a range of protective capabilities offered by the "Big Model Guard". The Alibaba booth also presented a "big model security full link solution".

Zhou Hongyi, the founder of 360 Group, who attended this World Internet Conference, said in an interview with Global Times that every digital technology is inevitably a double-edged sword. Where there is software there will be vulnerabilities, and where there are vulnerabilities there will be attacks. Large AI models are no exception. Zhou Hongyi believes the security issues facing artificial intelligence fall into three levels: primary or near-term problems, intermediate or mid-term problems, and advanced or long-term problems. The primary, near-term problems are technical attacks, namely network attacks, vulnerability attacks, and data attacks, especially feeding poor data to large models or contaminating their training data, which can lead to incorrect results. Problems of this type are relatively easy to solve. Harder to solve are the intermediate, mid-term problems, which mainly concern content security. A large model can be a great helper for ordinary people, but also a tool for bad actors. It can help a low-skilled hacker write better attack code and scam emails. How can its output be made more controllable? How can large AI models be kept from doing harm? This goes beyond the realm of technology alone. Although some developers have built so-called "security barriers" into large models, these are easily defeated by injection attacks or algorithm attacks. One solution to this type of problem is to develop small models that specifically target malicious questions, so that user questions are first filtered by the small model before the large model answers them.
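The filtering idea described above, a small model screening questions before the large model answers, can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration and not the method of 360 Group or any vendor: the keyword list `SUSPICIOUS_TERMS` and the function `small_model_score` are toy stand-ins for a trained small classifier, and `echo_model`, `guarded_answer`, and the `threshold` value are hypothetical names.

```python
from typing import Callable

# Hypothetical term list standing in for a trained small classifier.
SUSPICIOUS_TERMS = (
    "phishing email",
    "ransomware",
    "exploit code",
    "bypass authentication",
)


def small_model_score(prompt: str) -> float:
    """Toy risk score in [0, 1]: 0.5 per suspicious term found, capped at 1.0."""
    text = prompt.lower()
    hits = sum(term in text for term in SUSPICIOUS_TERMS)
    return min(1.0, hits / 2)


def guarded_answer(
    prompt: str,
    large_model: Callable[[str], str],
    threshold: float = 0.5,
) -> str:
    """Screen the prompt with the small model; call the large model only if it passes."""
    if small_model_score(prompt) >= threshold:
        return "Request declined by safety filter."
    return large_model(prompt)


def echo_model(prompt: str) -> str:
    """Placeholder for a real large model call (assumption for this sketch)."""
    return f"[large model answer to: {prompt}]"


if __name__ == "__main__":
    print(guarded_answer("Write a phishing email for a bank", echo_model))
    print(guarded_answer("Summarize the Asian Games schedule", echo_model))
```

In a real deployment the keyword check would be replaced by a trained classifier. The point of the design is only that the screening step sits outside the large model, so an injection attack on the large model's own built-in barriers does not bypass it.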

As for the advanced, long-term level, Zhou Hongyi believes that a large model can integrate all human knowledge and then communicate with humans and offer them prompts. But if the abilities of large models one day surpass those of humans, will they still be willing to serve as human tools? "My point is that the challenges brought by these technologies ultimately need to be solved by technology, and technology itself will continue to evolve," Zhou Hongyi said. "We cannot stop developing related technologies just because of problems that have not yet occurred."
